The way Google completes our sentences during internet searches is affecting our thoughts, says Tom Chatfield.

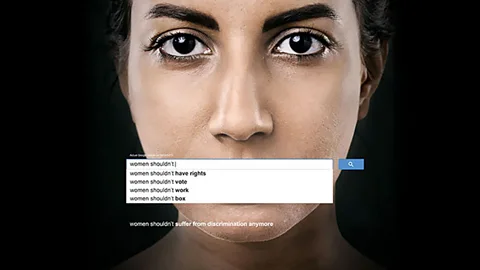

“Women shouldn’t have rights.” “Women shouldn’t vote.” “Women shouldn’t work.” How common are these beliefs? According to a recent United Nations campaign, this kind of sexism is sadly widespread, which is why they displayed these statements on a series of posters. The source? These statements were the top suggestions from Google’s “instant” search tool when people typed “Women shouldn’t…” into the search box.

Google Instant is an "autocomplete" service that automatically suggests letters and words to complete a query, based on the company's knowledge of billions of searches performed worldwide each day. If I type "women should," the top suggestion on my screen is "women shoulder bags," followed by the more troubling "women should be seen and not heard." If I type "men should," the mysterious suggestion "men should weep" appears.

The UN campaign argues that this algorithm offers a glimpse into our collective mindset—and it's a concerning one. But is this really accurate? Not in the way the campaign suggests. Autocomplete is flawed and biased in many ways, and there are risks if we ignore this. In fact, there's a strong case for turning it off completely.

As with many of the world's most successful technologies, the success of autocomplete is marked by how little we notice it. The better it works, the more its predictions align with our expectations, so much so that we notice it most when something lacks this feature, or when Google suddenly stops predicting our needs. The irony is that the more effort is put into making the results appear seamless, the more genuine and truthful they seem to users. To find out what "everyone" thinks about a particular issue or question, you just start typing and watch the answer appear before you have finished.

Like any other search algorithm, autocomplete uses a mix of data points behind its smooth interface. Your language, location, and timing all play a big role in the results, along with measures of impact and engagement, not to mention your browsing history and the "freshness" of any topic. In other words, what autocomplete shows you isn't the whole picture, but what Google thinks you want. It's not just about truth; it's about "relevance."

And that is before we consider censorship. Google understandably blocks terms that might promote illegal activities or content unsuitable for all users, along with many phrases related to racial and religious hatred. The company's list of "potentially inappropriate search queries" is constantly updated.